The purpose of Open Access Vis is to highlight open access papers and transparent research practices on persistent repositories outside of a paywall. See the about page and my paper on Open Practices in Visualization Research for more details.

Most visualization research is funded by the public, reviewed and edited by volunteers, and formatted by the authors. So for IEEE to charge $33 for each person who wants to read the paper is… well… (I’ll let you fill in the blank). This paywall, as well as the general opacity of research practices and artifacts, is contrary to the supposed public good of research and to the claim that visualization research helps practitioners who are not on a university campus. And this need for accessibility extends to all research artifacts, for both scientific scrutiny and applicability.

This is part 1 of a multi-part post summarizing open practices in visualization research for 2019.

Related posts: 2017 overview, 2018 overview, 2019 part 2 – Research Practices, 2019 part 3 – Who’s who?

Updates for 2019

1. Persistent repositories only: Link rot has been a frustrating problem over the past couple of years, so papers hosted on personal sites and personal repositories are no longer included. Other research artifacts can still be on personal sites for now.

2. arXiv instructions sent to all authors: VIS sent authors of accepted papers instructions for posting to arxiv.org. While the result was a large increase in paper sharing, arXiv has serious usability problems. This gatekeeping prevented multiple people from completing the process. Luckily, plenty of authors ignored the VIS instructions and used simpler options like OSF.

3. Splitting the Materials badge: The criteria for the materials badge include both the materials needed for empirical replication and those needed for computational reproduction. Some people shared one but not the other, despite having both components in their research. So I’ve split the badge into experiment materials and computational materials to clarify the requirements and increase the likelihood of people being able to earn at least one. The combination of both materials badges is equivalent to the Center for Open Science’s Materials badge.

4. No hunting for materials: Previously, I would google for research artifacts that were not linked from the paper. Since the goal is to make clear what readers have access to, and since the search yielded very few additional findings in previous years, I stopped this practice. Now, only URLs from the paper are included.

5. New URL: The site is now at http://oavis.org

Archived papers for 2019

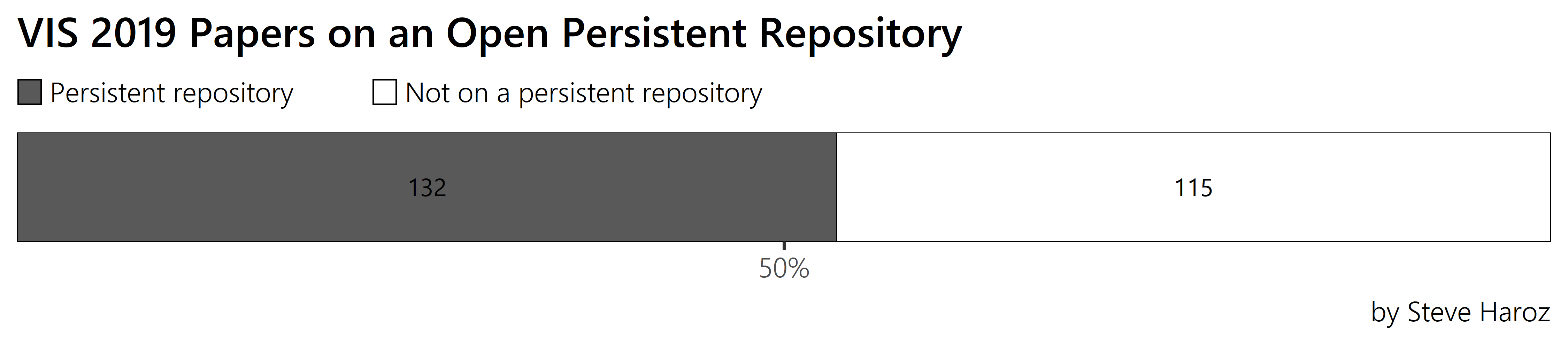

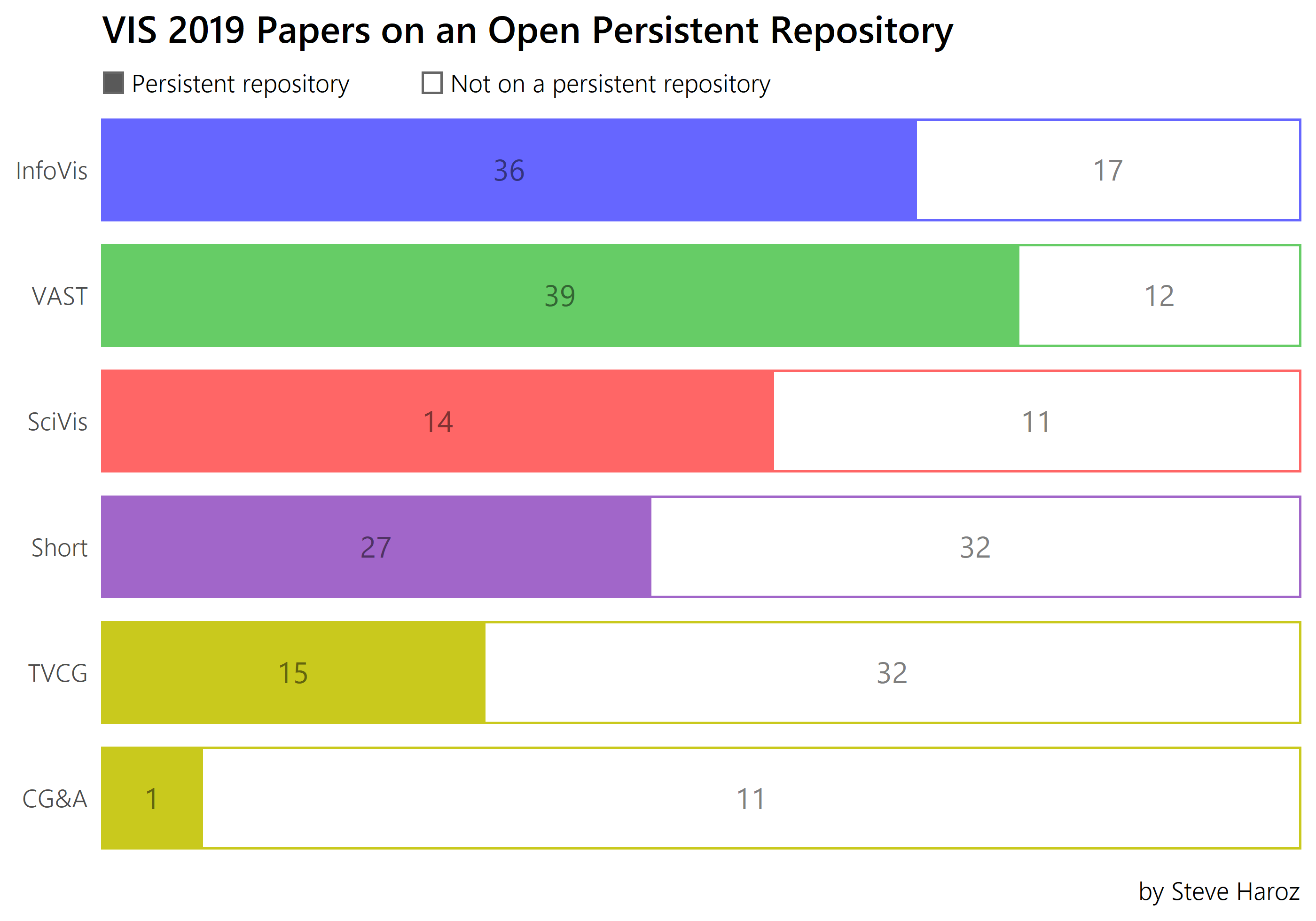

At 53%, it’s excellent that the overall rate has passed half. But it’s still not 100% despite zero cost to authors. It’s also not 65%, despite what was announced at the conference.

The variability between review tracks is substantial. The short paper track is new, yet it’s starting out as the worst conference-direct track. Meanwhile, the journal article authors are continuing an abysmal rate of sharing their research.

What counts as an “open persistent repository”?

The criteria for which sites are considered reliable on OAVIS are based on the FAIR principles, Plan S, and Plan U. The content of the repository must be:

- Freely accessible – No signup or membership requirement

- Discoverable – If it can’t be found on commonly used search engines, does it exist?

- Persistent – There needs to be an explicitly stated plan for long-term persistence (example). If an institution has a great plan for long-term persistence, they can write it down in their repository’s about page.

- Immutable – A unique identifier points to a specific version of the research that cannot be changed. Updates are fine as long as previous versions are transparently maintained.

Personal websites and GitHub repositories do not meet these criteria.
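For anyone who wants to apply the same checklist to their own list of hosting sites, here is a minimal sketch of the four criteria as a data structure. It is not the code behind OAVIS. The class, function names, and both example entries are hypothetical, and the specific criteria I mark as failing for the personal site are illustrative assumptions rather than an assessment of any real site.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Repository:
    """Hypothetical record of how a hosting site fares on each criterion."""
    name: str
    freely_accessible: bool  # no signup or membership requirement
    discoverable: bool       # can be found on commonly used search engines
    persistent: bool         # explicitly stated long-term persistence plan
    immutable: bool          # identifier points to a fixed version; old versions kept


def unmet_criteria(repo: Repository) -> list[str]:
    """Return the names of the criteria this repository fails."""
    checks = {
        "freely accessible": repo.freely_accessible,
        "discoverable": repo.discoverable,
        "persistent": repo.persistent,
        "immutable": repo.immutable,
    }
    return [name for name, passed in checks.items() if not passed]


def is_open_persistent(repo: Repository) -> bool:
    """A repository qualifies only if every one of the four criteria is met."""
    return not unmet_criteria(repo)


# Illustrative entries only; the True/False values are assumptions.
example_archive = Repository("example-archive", True, True, True, True)
personal_site = Repository("personal-site", True, True, False, False)

for repo in (example_archive, personal_site):
    status = "qualifies" if is_open_persistent(repo) else "does not qualify"
    print(f"{repo.name}: {status}; unmet: {unmet_criteria(repo)}")
```

The point of the all-or-nothing check is that the criteria are not a score: a site that fails any single one is excluded, no matter how well it does on the others.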

There’s more

Stay tuned for the next parts of this series of posts, coming up very soon. All code and data for this series of posts are available at https://osf.io/4njyf

Sincere thanks to Andrew McNutt and James Scott-Brown, who helped with data collection and making thumbnails!