The purpose of Open Access Vis is to highlight open access papers, materials, and data and to see how many papers are available on reliable open access repositories outside of a paywall. See the about page for more details about reliable open access. Also, I just published a paper summarizing this initiative, describing the status of visualization research as of last year, and proposing possible paths for improving the field’s open practices: Open Practices in Visualization Research

Why?

Most visualization research papers are funded by the public, reviewed and edited by volunteers, and formatted by the authors. So for IEEE to charge $33 for each person who wants to read the paper is… well… (I’ll let you fill in the blank). This paywall is contrary to the supposed public good of research and to the claim that visualization research helps practitioners (who are not on a university campus).

But there’s an upside. IEEE specifically allows authors to post their own version of a paper (not the IEEE version with a header and page numbers) to:

- The author’s website

- The institution’s website (e.g., lab site or university site)

- A preprint repository (which gives it a static URL and avoids “link rot”)

Badges

- Open Access Paper

- Open Materials – Materials needed to replicate and reproduce the methods

- Open Experiment Data – Raw measurements needed to reproduce results

- Preregistered (New this year!) – A timestamped, immutable plan

More detail on the badges is in the about page.

New this year

1. The preregistered badge is awarded to papers that deposit an experiment plan (and preferably analysis plan) onto a timestamped and immutable repository prior to collecting and analyzing data. The goal is to signify papers that make any flexibility in the experiment variables as transparent as possible. Adding this badge brings OAVIS more fully in line with the official Center for Open Science badges. Only one paper earned the badge – Hypothetical Outcome Plots Help Untrained Observers Judge Trends in Ambiguous Data by Kale, Nguyen, Kay, and Hullman.

2. The “explanation” link was moved into the paper description under the abstract. It’s not really a badge that signifies something about the transparency of the research. Like the video, it is bonus content to help explain the work, so it now sits between the abstract and the video. (And it was really hard to settle on any objective definition of a “good” explanation.)

3. Papers with a PDF on a personal or institutional site but not on a reliable open access repository won’t have all features enabled. Putting a paper on a reliable preprint server like osf.io/preprints or arxiv.org takes minutes – far less time than it takes to make a video or an explanatory Medium post. So those bonus links and features are disabled until the paper is posted to a reliable location.

4. Note for next year: This is the last year that I will do any searching beyond URLs that are already in the paper. From now on, if there are any additional materials, data, or other information, put a URL in the paper. Heck, put it in the damn abstract! (Example)

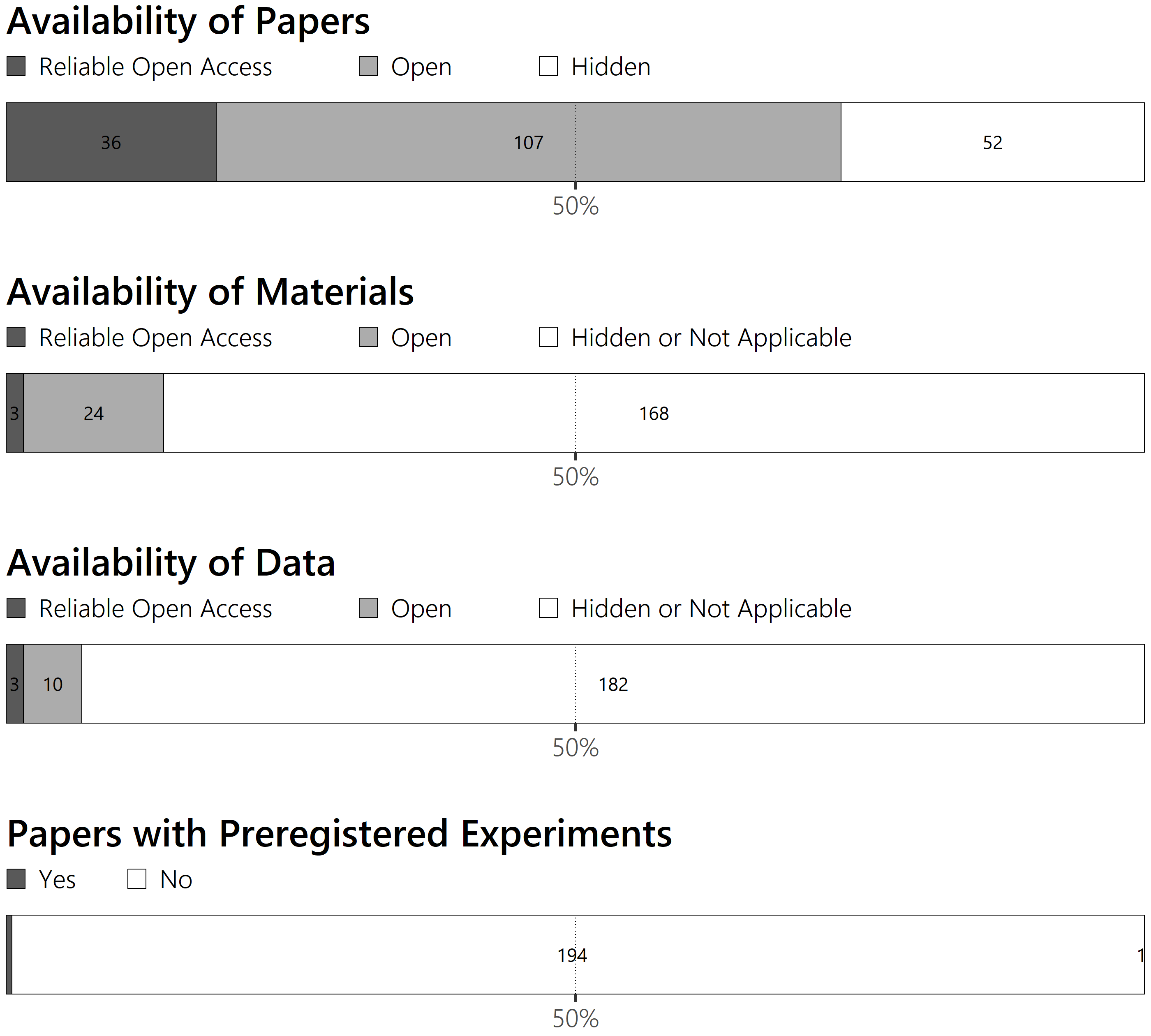

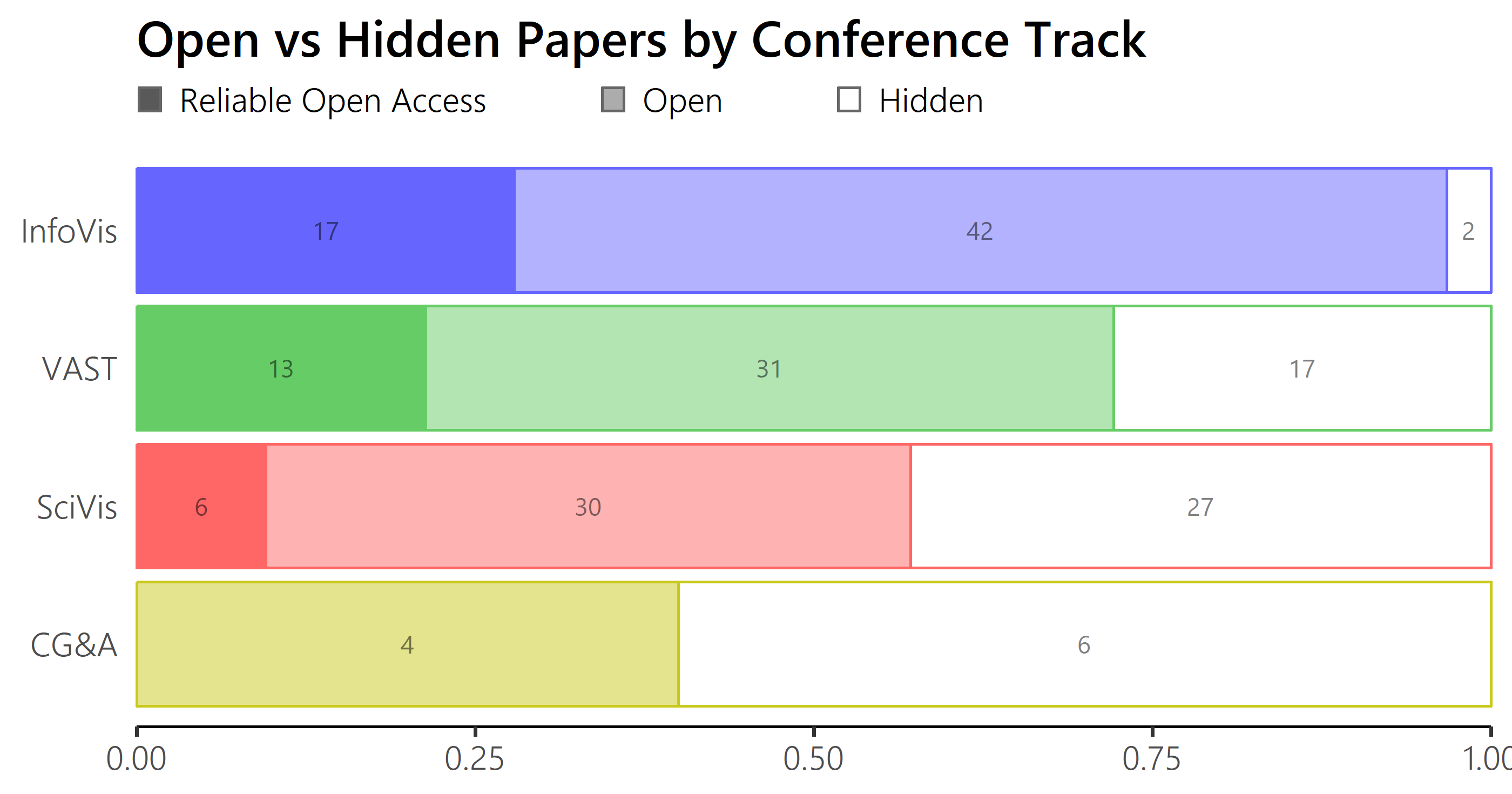

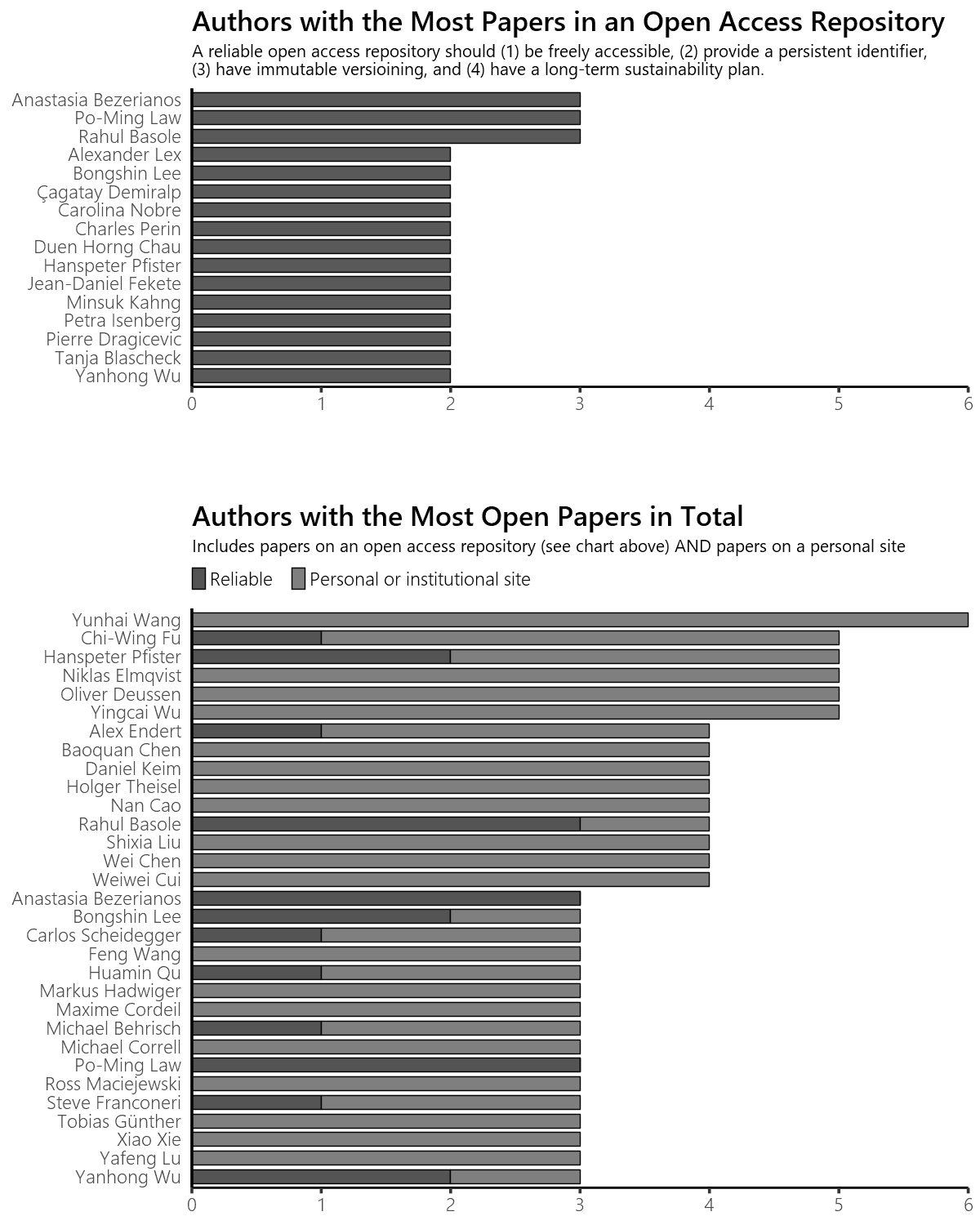

Stats for 2018 (Updated Nov 12th)

How do I share my work?

I will update these graphs occasionally over the next couple of weeks as I find shared papers and materials. All you have to do is:

- Upload your work to any open access repository (such as the Open Science Framework). It takes under 5 minutes!

- Make a GitHub issue with the new information or to point out information we missed.

Thank you

Sincere thanks to Noeska Smit, Isaac Cho, and André Calero Valdez, who helped with data collection and making thumbnails!

Edit: For now, I’ve removed the “most hidden” chart, at least until the data is more complete and accurate.

Hi Steve,

I came across your page which is a good effort. I was, however, surprised to find my name on the “most hidden list”. All my papers, with nearly no exception, are available from my website http://www3.cs.stonybrook.edu/~mueller/research/ (follow the individual research area links). Some even have special informative webpages with additional information such as videos. Actually, I take great pride in making my papers available quickly.

Your blog inspired me to check whether Google actually “knows” about the PDFs and, again to my surprise, it did not for those I tested. Maybe you know why and how to correct this (without uploading all of them to arXiv, which would take time because there are so many).

Thanks

Hi Klaus. Thanks for your comment. The data was compiled by manually googling each paper, so it’s not too surprising that we missed some. And, yeah, Google is not finding your PDFs for some reason. Maybe something like a “robots.txt” file is preventing Google from scanning your site fully? I’m not sure.

I wouldn’t worry about posting everything you’ve ever done to arXiv. That would be pretty time-consuming. Just start with the most recent ones… for example, your recent VIS papers 🙂
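A robots.txt file is only one possible reason a search engine skips a site's PDFs. As a minimal sketch, assuming Python 3 and its standard-library `urllib.robotparser` module, here is one way to check whether a given set of robots.txt rules would stop Googlebot from fetching a PDF. The helper name `is_crawlable`, both rule sets, and the `example.pdf` path are hypothetical illustrations, not the actual contents of the site discussed above.

```python
from urllib.robotparser import RobotFileParser


def is_crawlable(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the robots.txt text allows user_agent to fetch url.

    Hypothetical helper: robots_txt is the raw text of a site's robots.txt
    file. Parsing from a string avoids any network access.
    """
    parser = RobotFileParser()
    # parse() takes an iterable of lines and records the parse time,
    # which can_fetch() needs before it will ever return True.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical rules for illustration only; NOT the real rules of any site.
BLOCKING_RULES = """\
User-agent: *
Disallow: /~mueller/
"""

# An empty Disallow line means "allow everything".
PERMISSIVE_RULES = """\
User-agent: *
Disallow:
"""

# Hypothetical PDF path, used only to exercise the rules above.
PDF_URL = "http://www3.cs.stonybrook.edu/~mueller/research/example.pdf"

if __name__ == "__main__":
    print(is_crawlable(BLOCKING_RULES, PDF_URL))    # False: the directory is disallowed
    print(is_crawlable(PERMISSIVE_RULES, PDF_URL))  # True: nothing is disallowed
```

For a live site, `RobotFileParser` can instead be pointed at the real file with `set_url(...)` followed by `read()`. A permissive result only rules out this one cause; it does not guarantee that Google will index the PDFs.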

I am really intrigued by the idea of improving openness of VIS research. After attempting to put my work on OSF, I have some thoughts and concerns.

Visualization research is multidisciplinary. Different people identify themselves differently: some are scientists, some are designers, and some are engineers. It seems to me that the open practices you are advocating lean towards the science and psychology side of visualization research. I think different kinds of visualization research have different requirements for openness and different platforms might be more suitable.

For perceptual study papers, it seems that an important rationale for openness is replication, and I think OSF is fine.

But for survey papers, replicating findings is less important. (Different people have different world views and hence summarize information differently; what seems more important is whether the authors are able to offer a new perspective on the surveyed papers.) I feel that the reason we want openness for survey papers is to keep the survey changing and updating over time. A website that allows collaborative editing serves this purpose better than OSF (like this: https://fredhohman.com/visual-analytics-in-deep-learning/).

For papers that are more design- and engineering-focused, replication is never a consideration, and we never talk about “replicable design”. If a paper is about a system, openness is still important, partly because a written paper alone does not capture interactions very well. To spread ideas and scaffold future vis system research, videos and working prototypes are good ways to improve openness. That is why GitHub is great for making system papers openly accessible.

Right now, I don’t see a single platform that allows different types of vis researchers to open their work. You described how we could make experiment papers more accessible, but I can’t find guidelines for opening a research project if it is a survey or if it is more design-oriented. These are critical concerns, as they affect people’s incentive to make their work more openly accessible.

I may be missing something, though, as I had not been following the open practice stuff until I saw your tweet…

Hi Terrance. Besides replicability, it is critical that research can be scrutinized and built upon by future researchers. For that to be possible, everything needed to support the conclusions should be available on a repository that meets the following requirements:

OSF meets those requirements, as do some other repositories like Zenodo. But personal websites and GitHub do not. While posting a clear explanation on a personal website is a great way of publicizing research, that is a distinct goal from preserving it and enabling transparency and scrutiny.

I agree with your breakdown of the field in terms of science, design, and engineering. But engineering papers built on code and design papers built on schematics or design plans should also make that work available on a platform where reproducibility and scrutiny are possible. GitHub is a great place for working on such projects, but it is not reliable long term.

As for survey papers, most don’t have any information beyond what is in the paper itself. If the primary support for their contribution is a table put together by the authors, and that table is in the paper, an external repository is not necessary for scrutiny. Only the accessibility of the paper matters.

You can read more about open practices in vis here

Thanks for your work in promoting open practices in vis. The case you make is convincing and necessary and the cataloguing you have carried out both useful and likely to effect change.

I do wonder whether combining the ‘not applicable’ and ‘hidden’ categories for ‘Data’ and ‘Materials’ is sensible though. With ‘Data’ especially, there are many cases where papers don’t include empirical experimental studies with ‘raw data’ and all exemplar data are open as ‘Materials’. One of the purposes of badges is (presumably) to be able to make a quick judgement as to whether there is sufficient open content to be able to reproduce. There is a big difference between a study with hidden data (potentially not reproducible/verifiable) and one that does not use data in the sense you have defined it (potentially reproducible).

Would it be practical to only include ‘ghosted’ badges for those cases where data exist but are hidden, and not include any reference to the badge where it is not applicable? It would also be interesting to see the stacked bars you have created updated to take this distinction into account (I understand this is extra work, so no worries if it is not really practical).

Hi Jo. Your intuition is right that while the ghost badge is not ideal, it is not practical for me to read all 195 papers closely enough to distinguish ‘hidden’ from ‘NA’. Watch for an upcoming blog post about a subset though…

However, we can use this information to make at least some quick judgments. Many papers did run empirical studies, yet only 13 share empirical data (only 3 reliably). Some even have “study” in the title but don’t share the data. Furthermore, I suspect that around 95% of papers need some sort of materials or code to be replicated or reproduced, but nowhere near that many have the open materials badge (again, only 3 reliably).

The badges are certainly an imperfect way of signaling openness, but they should be seen as an intermediate rewarding step towards mandatory open practices. They primarily raise awareness. Unless the paper is a review article, we should start asking why there is no materials badge. Eventually, TVCG and the conference should formally adopt the TOP guidelines and begin leveling up towards level 2 (mandatory open practices) or even level 3 (designated reviewers to check reproducibility).