This is part 2 of a multi-part post summarizing open practices in visualization research for 2019. See Open Access Vis for all open research at VIS 2019.

This post describes the sharing of research artifacts: components of the research process itself, rather than simply the paper. I refer to sharing both these artifacts and the paper as “open research practices”.

Related posts: 2017 overview, 2018 overview, 2019 part 1 – Updates and Papers, 2019 part 3 – Who’s who?

Open research artifacts for 2019

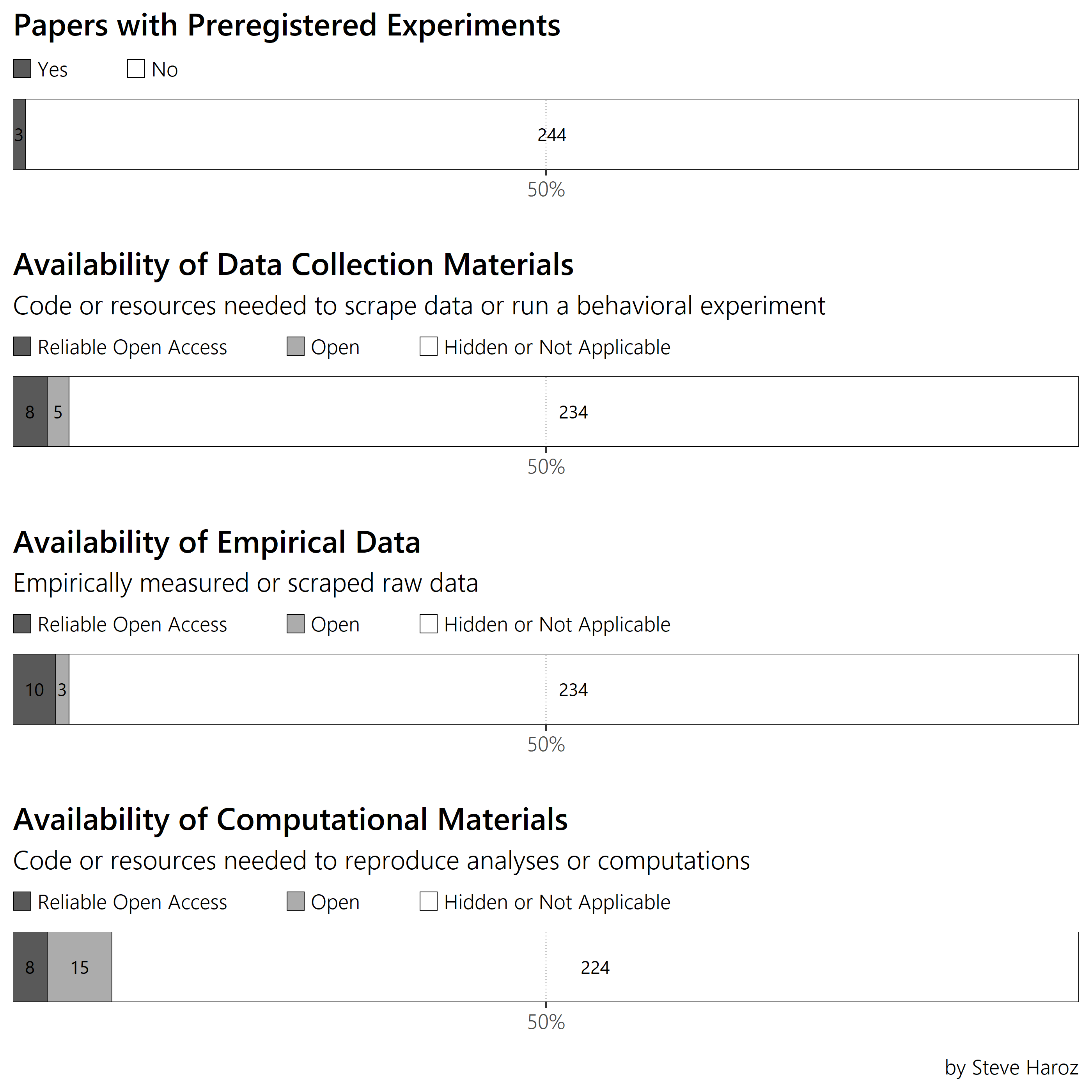

I’ve broken research transparency into 4 artifacts and counted the number of papers on an open persistent repository that linked to each. I’ve given “partial credit” if the component is available but not on a persistent repository.

Preregistration: A timestamped and immutable research plan describing research questions, independent variables, dependent variables, and covariates. See my FAQ.

Data collection material: The materials or source code needed to replicate the data collection, whether it’s conducted via a behavioral experiment, questionnaire, or scraping. FAQ in chapter 7. Full criteria.

Empirical Data: Raw data measured by the authors to support the conclusions of an empirical study. Tips and guides. Full criteria.

Analysis/Computational Material: The materials or source code needed to reproduce analyses and computations. FAQ in chapter 7. Full criteria.
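The counting rule described above (full credit when an artifact is on a persistent repository, partial credit when it is available elsewhere) can be sketched in a few lines. This is only an illustration, not the code behind the charts: the artifact keys and the status labels `"persistent"` and `"available"` are hypothetical names chosen for this example.

```python
from collections import Counter

# Hypothetical keys for the four artifacts defined above.
ARTIFACTS = ("preregistration", "data_collection", "empirical_data", "analysis")


def tally(papers):
    """Count, per artifact, the papers earning full and partial credit.

    Each paper is a dict mapping an artifact key to a status string:
    "persistent" (on a persistent repository, full credit),
    "available"  (shared, but not on a persistent repository, partial credit),
    or missing / "none" (not shared).
    """
    full, partial = Counter(), Counter()
    for paper in papers:
        for name in ARTIFACTS:
            status = paper.get(name, "none")
            if status == "persistent":
                full[name] += 1
            elif status == "available":
                partial[name] += 1
    return {name: {"full": full[name], "partial": partial[name]} for name in ARTIFACTS}
```

Keeping the two counts separate, rather than adding partial credit as a fraction, makes it possible to report persistent sharing on its own.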

The Results

Preregistrations have tripled at VIS! There was only one preregistered experiment published last year, and now there are three. While it’s a laudable improvement, far more than three papers include an experiment with strong, explicit claims. In other words, many papers include substantial hidden flexibility, which harms the validity and credibility of the field.

Materials and empirical data, however, are largely unchanged from last year, although there is increased use of persistent repositories. Because VIS has no policy or author guidelines regarding open research practices, these low numbers are not very surprising.

Is anything open?

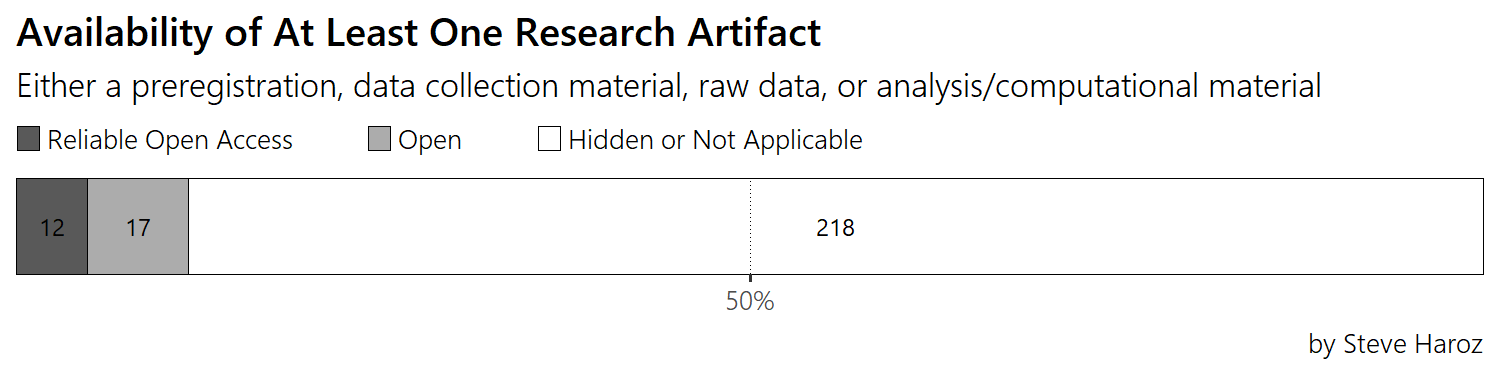

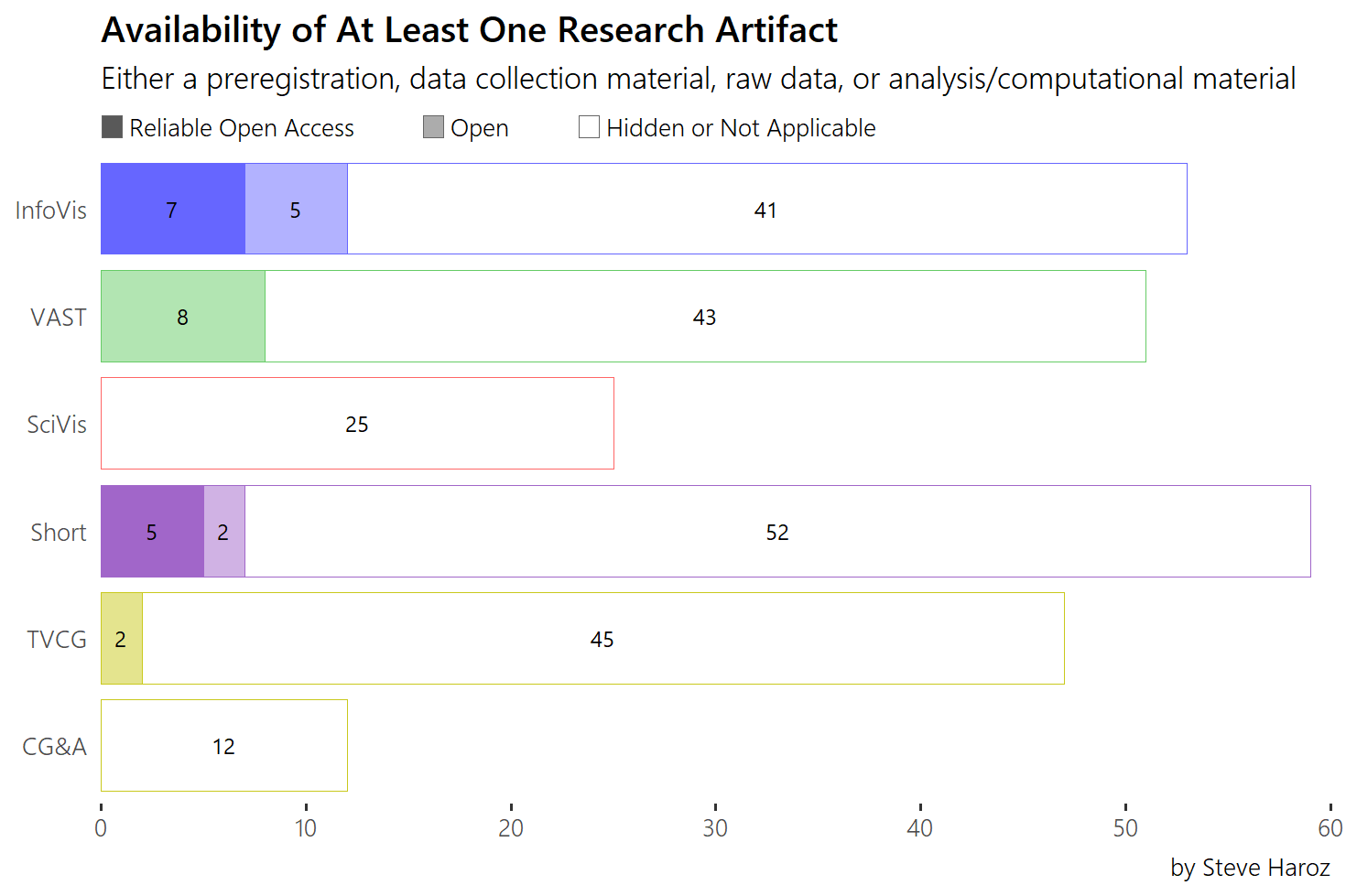

A fair point to make is that not every research project includes every research artifact. A purely computational project, for example, may not include any of the empirical artifacts like preregistration, data collection, or empirical data. So I’ve made the most generous possible estimate of openness by asking “Is anything open?”.

This measure simply asks whether any one of a preregistration, data collection material, empirical data, OR analysis/computational material is available. The results are still abysmal.
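As a rough sketch, the “Is anything open?” measure is a logical OR across the four artifacts, summarized per review track. The field names, the status labels, and the `require_persistent` switch below are assumptions made for illustration; they are not taken from the analysis code for this series.

```python
# Hypothetical keys for the four artifacts.
ARTIFACTS = ("preregistration", "data_collection", "empirical_data", "analysis")


def anything_open(paper, require_persistent=False):
    """True if at least one artifact of the paper is shared.

    `paper["artifacts"]` maps an artifact key to "persistent", "available",
    or "none". With require_persistent=True, only artifacts on a persistent
    repository count.
    """
    allowed = {"persistent"} if require_persistent else {"persistent", "available"}
    statuses = paper.get("artifacts", {})
    return any(statuses.get(name, "none") in allowed for name in ARTIFACTS)


def share_open_by_track(papers, require_persistent=False):
    """Fraction of papers per track with at least one open artifact."""
    totals, opened = {}, {}
    for paper in papers:
        track = paper["track"]
        totals[track] = totals.get(track, 0) + 1
        if anything_open(paper, require_persistent):
            opened[track] = opened.get(track, 0) + 1
    return {track: opened.get(track, 0) / totals[track] for track in totals}
```

Because a single shared artifact is enough to count a paper as open, this is an upper bound on openness rather than a measure of how complete the sharing is.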

InfoVis and the Short papers (mostly the infovisy ones) have at least some research artifacts on a persistent repository. The other review tracks seem to have little awareness of or interest in research transparency. Without any official policy for informing authors, it’s not clear whether that will ever change. But it’s worth asking if hidden research is credible for citation. If it is, why is it being hidden? To increase the credibility and applicability of their work, authors can try out the Open Science Framework, read the links in this post, have a look at some of my guides, or contact someone who has experience with open research. But the field’s credibility will keep suffering as long as so much research is hidden.

There’s more

Stay tuned for the next part of this series of posts coming up very soon. All code and data for this series of posts is available at https://osf.io/4njyf

Sincere thanks to Andrew McNutt and James Scott-Brown, who helped with data collection and making thumbnails!