Imagine someone explaining a complex topic, like how to improve the fuel efficiency of a boat. But shortly after starting the explanation, they go off on a series of tangents about pretty boats they’ve seen, big boats, rubber ducks, submarines, and other transportation vehicles such as the new 787 by Boeing et al. Then they return to the explanation of boat efficiency without ever referencing why they brought up those strange tangents.

Tangents are confusing, and they hurt clarity. The related work section is often just a string of unrelated tangents, which is a waste of the reader’s time.

Now let me make something clear: I am not necessarily saying that papers should cite fewer sources. Instead, each citation should serve an obvious, specific purpose. And if that purpose is so tangential to the structure of your argument that you need to put it in what amounts to a citation dumping ground, then it isn’t needed.

What’s the purpose of a citation?

1. Support a claim

Offloading proof is the primary reason to cite an article. It allows you to make a statement without having to rehash an entire body of literature. However, make sure to provide a succinct description of the conclusion. Otherwise, its relevance is unclear.

Good example: Task A has good performance for this condition [citation] but bad performance for that condition [citation].

Bad example: Smith et al. have looked at this task [citation]. (without any description of what was found)

2. Contrast what is and isn’t already known

This type of citation clarifies that a question is not already answered. It’s still supporting a claim, but it says “we don’t want you to confuse the problem we’re solving with the problem that another article solved”. However, only use this type of citation to clearly contrast very similar work.

Good example: Although technique A is better than B for task X [citation], we don’t know if that benefit extends to task Y.

Bad example: Our paper is not like X [citation], Y [citation], or Z [citation], as those works are on completely different topics in completely different fields.

3. Motivate the question

Research should be more than an intellectual exercise. A paper should contextualize the knowledge that will be obtained. It’s tough when you’re deeply embedded in solving a problem to remember to explain to others why solving the problem matters. But it’s necessary.

However, motivation can often be accomplished through a general citation-less discussion (e.g. “imagine if someone wants to…”). Motivating via citations becomes useful when you want to show that people are already doing a task that you want to improve or understand.

But be succinct! You don’t need an exhaustive list in the beginning of a paper. One way to simplify the motivation citations is to put examples in a table or appendix. More than a couple examples in the body of a paper tends to be more distracting than informative.

4. A hat tip to tools and methods used

Stating which tools were used can certainly clarify how the authors accomplished something. But there’s a line between being helpfully descriptive and overly verbose. It’s important to cite a new or little-known tool, whereas long diatribes about the history of tools that most readers are already familiar with are unnecessary. The degree of explanation can range from a citation-filled subsection devoted entirely to explaining a very new tool, to a single citation, to just a mention for the most familiar tools.

The same is true for experimental methods. You really don’t need to describe Fechner’s early use of the method of limits, nor is it appropriate to cite the patent for the tachistoscope for timed presentation. Use common sense here.

Good example: We compute position via the recently released toolkit X [citation or just link].

Bad example: We ran this experiment on the world wide web [citation] which runs on a network [citation] of transistor-based computing machines [citation].

Why you should NOT cite an article

1. This topic is kinda sorta like that topic

These citations often make up a lot of the bloat in introductions and related work sections. The question to ask is whether your argument will be weakened by dropping the citation. For kinda-sorta-like citations, dropping them has no effect on the logic of the paper.

Bad example: We examine the benefit of curvature in edge bundling. Euler’s work from 300 years ago looked at curves too [citation]. Here are 47 other things that were round…

2. Just because a reviewer said so

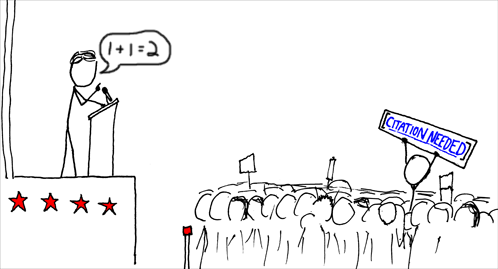

This mistake is more the fault of reviewers than authors. It’s understandably tough to rebut a review when your submission’s acceptance relies on the reviewer’s approval, so many authors just add suggested references without question. These unexplained citation demands are common in reviews, which is unfortunate because they’re rarely productive:

“This work fails to cite: [Smith 2001] and [Jones 2002]. Reject!”

However, it is completely valid for a review to say that a submission fails to support a pivotal claim. In that case, it’s not even necessary to specify which reference is missing, though a review should try to suggest references that can support the claims. Such review comments can help and substantially strengthen the final publication.

But where SHOULD citations go?

Cite references in narrative support of your argument throughout the introduction and the body of your paper. A good introduction gets to the point quickly. It guides the reader through the motivation for studying a problem and sets up the main questions. Then each section can cite support for any additional claims or expectations not discussed in the introduction.

The “related work” section seems to mostly be a phenomenon of computer science publications. Other fields and sciences manage just fine without it, and I can attest that InfoVis and CHI papers can be written without this unneeded section.

I think the reason that related works sections are prevalent in Vis and CHI is the weird culture we have of “engineers doing science, and scientists doing engineering.” So in a lot of cases we have papers that don’t fit the mold of “make an empirical claim, and then support it” or even “make a theoretical claim and then defend it.”

That means we have some weird incentives:

1) We’re small enough + have few enough top tier venues for publication that we may not be able to get away with ignoring a reviewer or trying something potentially unpopular.

2) We’re new enough + engineer-y enough that the “wow factor” is a big part of the impact of a work. (Other than your best paper a few years ago, how many InfoVis best papers have been theoretical or empirical work rather than cool systems?)

3) We’re unstructured enough that we don’t have a ton of codified theory/methods to build off of. I can run a (for example) psych experiment and trust my reviewers to believe that I’m attacking an important problem and am using the right methodology with reasonably little verbiage. In Vis I might have to do the equivalent of “cite Euler about curves” just to argue for the first principles of the paper. As an example, almost all VIS papers I can think of that are ABOUT experiments will cite Cleveland & McGill even if they are not adopting their specific methodology, or building off of one of their results.

Here are some additional things that citations might do in our hybrid Frankenstein’s monster of a field. Note that these are not particularly GOOD reasons, but they are certainly other things to consider when people are setting out related works sections in VIS papers.

Citations can:

1) Establish novelty. We’re one of the few fields where (rightly or wrongly), we can get points not just for inventing new techniques but being the first to apply them to a new domain or domain problem. Whether this is wrong or not is sort of related to the answer to the question “if I make a vis tool for my collaborators and they cure cancer, should I get a vis paper out of it, even if the vis itself isn’t that exciting?” But in any event this is where the “big boats, rubber ducks, submarines” type of citations slot in. We have to prove a negative (“nobody has ever made a tool in this area”) and so the quasi-related citations are actually a form of evidence (“these are the closest papers we could find, and they aren’t even that similar”).

2) Establish ethos/authority. In science this 100% shouldn’t matter (hence double blind reviews and so on). But it very much does. Having a lot of citations is evidence that you’ve read a lot of papers which is evidence that you know what you’re talking about. Having a lot of citations is a type of superficial polish that can make or break papers. It’s like dazzling people with equations when they aren’t needed. The downside is it will make the paper more difficult to read, but if it convinces people to let it in, then the incentives aren’t aligned with legibility (even assuming we can overcome the disincentives for academics to admit “I don’t understand this point, or why this work was cited”).

3) Create hypertexts. By this I mean creating a connected graph of papers to help me accomplish some rhetorical task. For instance, I might want to situate a work within a particular project. I might cite a bunch of work which lays out the timeline of the particular tool I am discussing (I might write about the initial design for InfoVis, write about the longitudinal evaluation for CHI, and then write about domain results for a journal in the collaborator’s field, for instance). Similarly, there might be a number of components to my design, all drawn from different, prior projects. I might hat tip to their evaluations to justify my design without running any new experiments myself. Lastly, I might cite a group of papers to try to game the system and make sure I get the right group of reviewers (for instance if I have a work that is lacking good evaluation I might cite a large group of quasi-related evaluation-light tools to up the odds that I will get a reviewer who cares less about evaluation). I can also try to “pre-please” my reviewers – it feels good to have your work cited, so if I cite a bunch of work and I get an author as a reviewer, they will feel good about my paper.

4) Reassure the authors that the work of doing a lit search wasn’t wasted. Most of the citations that don’t seem to have a purpose are likely a result of the authors doing a check for novelty, and to make sure they aren’t re-inventing the wheel. Often this effort is time consuming. There is a bad habit of assuming that man hours spent ought to correlate with words spent in the paper. One way of justifying this habit is to treat the related work as a sort of self-contained survey paper – “I did the work of looking up the sources connected to this domain or this type of tool, so now you won’t have to!”

5) Wonk bait. “Most normal people aren’t going to read the related work section, especially if I have a separate background section, so I can throw it in for the weird people who are super invested in the literature who want one.” So here it’s not disrupting the flow of the paper; the assumption is that most readers will just instinctively skip it. I suspect some people treat methodology sections this way as well. “Most people will skip right to the ‘meat’ of the results and discussion, so it’s as though they don’t even exist when considering how the paper flows.” They are for those contrarians who say “I don’t believe you” when you say that your study attacks a novel problem, rather than the general audience who just wants you to get on with it.

That was a doozy of a comment. As you said in your tweet, you seem to generally agree with the post, and you’re listing the excuses why people behave otherwise. I mostly agree that those are the reasons. But we should strive to improve rather than codify, excuse, or rationalize bad habits.

Some more specific responses:

Weird incentives:

1) Vis is a small field, so it’s tough to push against reviewer demands: agreed, which is why reviewers need to be clear about why they request a citation.

2) Michael Bay movies have a “wow factor”. After 5 minutes they’re boring. Vis should strive to avoid being the Michael Bay of academic research.

3) Lack of theories is exactly the reason to work on them. The huge citation count of Cleveland & McGill should incentivize more theory papers.

Citations can:

1) I’ll extend the novelty idea further: proving novelty can only be done by citing everything that has ever been published. That’s impossible, so only cite when a reader could genuinely confuse your work with the cited work (see point 2 in the post).

2) Don’t establish authority by citing shit. Reviewers need to be better about seeing through that bullshit.

3) Creating hypertexts is not the purpose of a paper. Googling keywords does a better job of that anyway.

4) “There is a bad habit of assuming that man hours spent ought to correlate with words spent in the paper”. Very well said!

5) If readers can skip over a section and still understand the paper, then it doesn’t need to be included.