When you see p = 0.003 or a 95% confidence interval of [80, 90], you might assume a certain clarity and definitiveness. A null effect is very unlikely to yield those results, right?

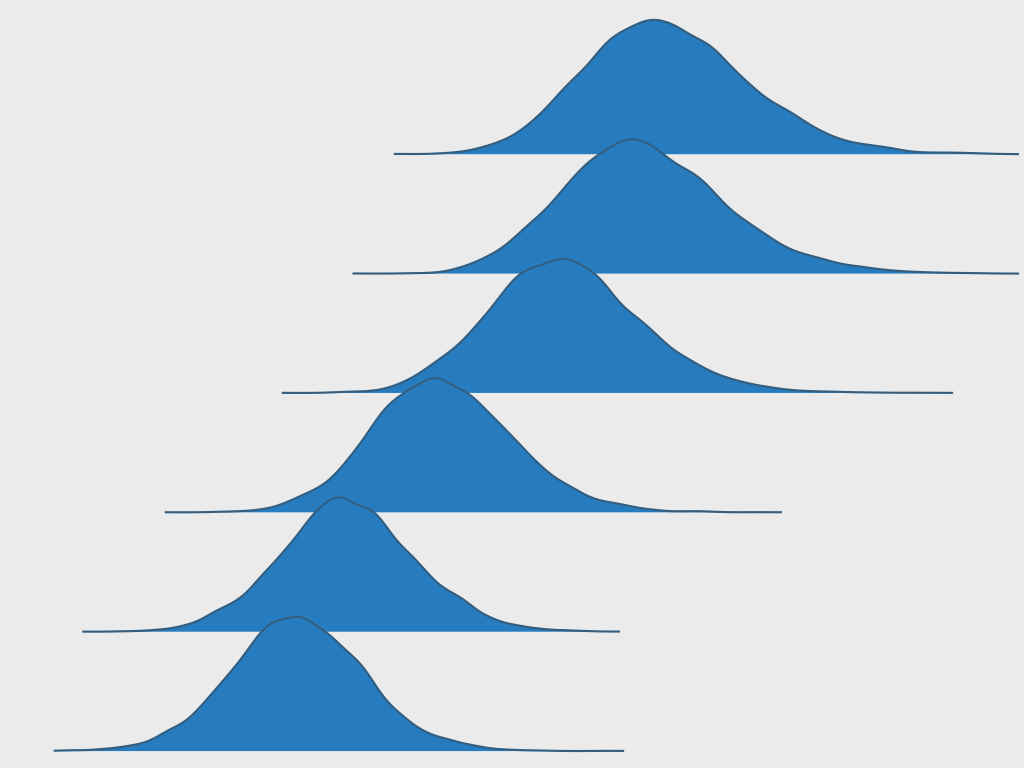

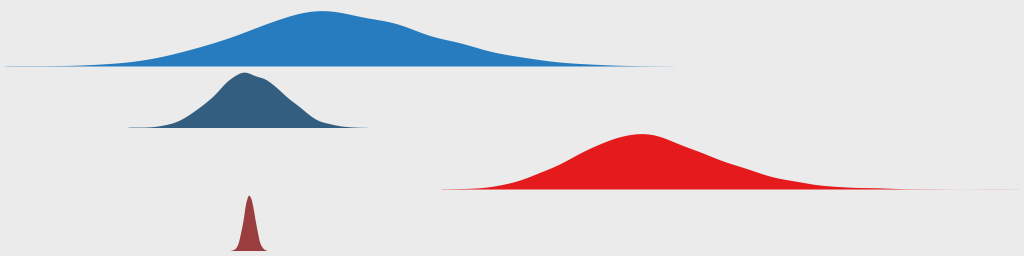

But be careful! Such overly simple reporting of p-values, confidence intervals, Bayes factors, or any other statistical estimate can hide critical, conclusion-flipping errors in the underlying methods and analyses. And in applied fields like visualization and Human-Computer Interaction (HCI), these errors may not only be common; they may explain the majority of study results.

Here are some example scenarios where reported results may seem clear, but hidden statistical errors categorically change the conclusions. These scenarios are not obscure: I have found variants of these problems in multiple papers.
